Lab 5 - Kubernetes Networking

Welcome to Lab 5. This session covers the following topics:

- Kubernetes networking basics;

- Kubernetes Services;

- Kubernetes Ingresses;

- Kubernetes NetworkPolicies.

Kubernetes networking basics

Kubernetes manages several network-based communication models:

- Pod-to-Pod;

- Container-to-container;

- Pod-to-Service;

- External traffic to Service.

In this lab, you will explore the first, third, and fourth models. The main resources used along the way are Service, NetworkPolicy, and Ingress.

Use-case development

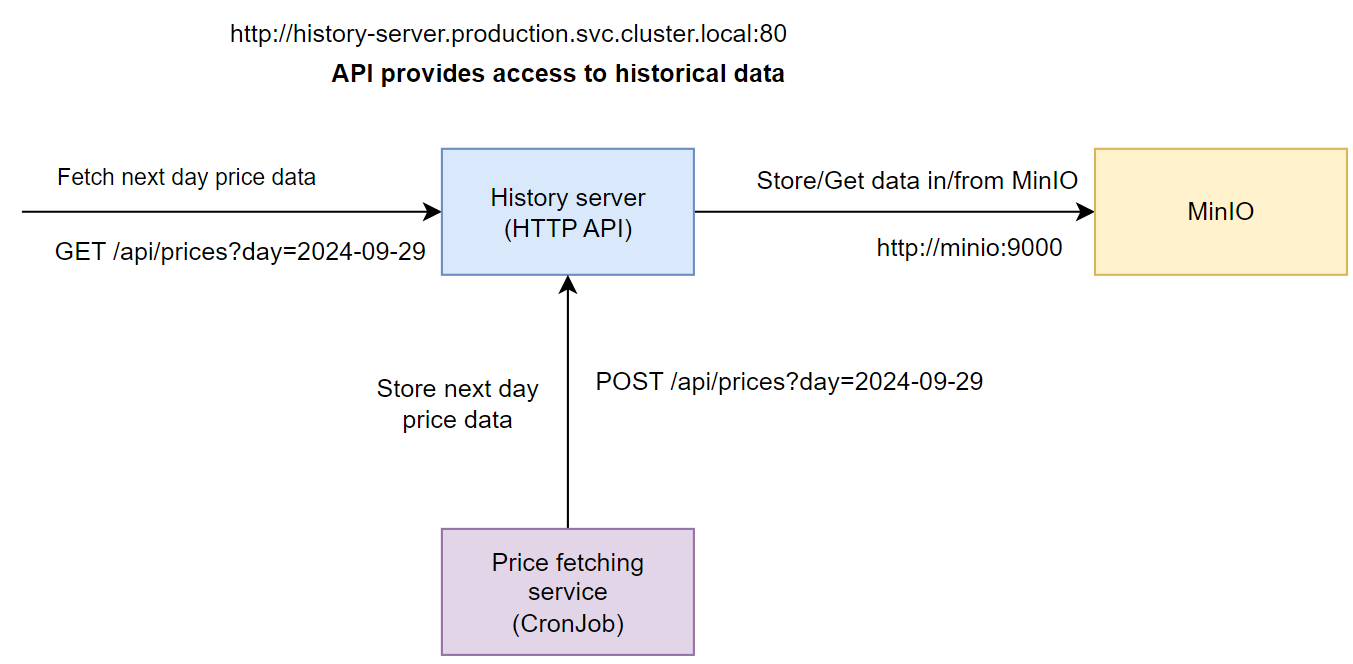

Before starting the main part of the lab, you need to implement a history data server application and update the price fetcher script so that it sends data directly to the history data server over HTTP.

History data server

Info

This app should be an HTTP API server that stores price records in MinIO and exposes them through a JSON interface. The server should have the following endpoints:

- GET /api/prices?day=<date> - find the price file for the specified date (for example, day=2025-09-29) in MinIO and return its contents as a JSON response;

- POST /api/prices?day=<date> - receive prices in JSON format for the specified date and save them as a file in MinIO.

Complete

Implement the HTTP API server application. You can use any programming language or technologies you want. If you are unsure which approach to use, it is recommended to use either Python FastAPI or Flask frameworks.

An OpenAPI specification for the API has also been prepared, so feel free to generate the HTTP API code in any supported language and implement the logic of the HTTP methods yourself. You can find the API specification here: History server OpenAPI specification

You can find an example skeleton for a Python Flask API (with the MinIO-specific methods left unimplemented) here: History server Flask example

The main requirement is that you use exactly the endpoints specified above and the following JSON format for price data messages:

```json
[
  {
    "estonian_time": "2025-10-01T00:00:00+03:00",
    "price": 5.01,
    "timestamp": 1759266000
  },
  {
    "estonian_time": "2025-10-01T01:00:00+03:00",
    "price": 3.21,
    "timestamp": 1759269600
  },
  {
    "estonian_time": "2025-10-02T00:00:00+03:00",
    "price": 78.57,
    "timestamp": 1759352400
  }
]
```
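To make the contract above concrete, the two endpoints and the record format could be described in OpenAPI 3.0 roughly as follows. This is an illustrative sketch, not the prepared History server OpenAPI specification: the titles, the 200 response codes, and the date format of the day parameter are assumptions, so treat the provided specification as authoritative.

```yaml
# Illustrative sketch only; the provided History server OpenAPI specification is authoritative.
openapi: 3.0.3
info:
  title: History server API (sketch)
  version: 0.1.0
paths:
  /api/prices:
    get:
      summary: Return the stored prices for one day
      parameters:
        - $ref: "#/components/parameters/Day"
      responses:
        "200":   # assumed success code
          description: Price records for the requested day
          content:
            application/json:
              schema:
                $ref: "#/components/schemas/PriceList"
    post:
      summary: Store the prices for one day in MinIO
      parameters:
        - $ref: "#/components/parameters/Day"
      requestBody:
        required: true
        content:
          application/json:
            schema:
              $ref: "#/components/schemas/PriceList"
      responses:
        "200":   # assumed success code
          description: Prices stored
components:
  parameters:
    Day:
      name: day
      in: query
      required: true
      schema:
        type: string
        format: date   # e.g. 2025-09-29
  schemas:
    PriceList:
      type: array
      items:
        type: object
        required: [estonian_time, price, timestamp]
        properties:
          estonian_time:
            type: string
            format: date-time
          price:
            type: number
          timestamp:
            type: integer
            description: Unix time in seconds
```

Describing both operations against the same PriceList schema keeps the GET response and the POST body identical, so the price fetcher and the history server share one data format.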

For the MinIO integration, use the official MinIO libraries: Software Development Kits. For example, the Python Quickstart Guide provides reasonable examples to start with if you choose Python. The MinIO library methods put_object, get_object, bucket_exists, and make_bucket should be enough to implement everything needed.

Read the MinIO connection settings (ACCESS_KEY, SECRET_KEY, and the MinIO URI) from environment variables instead of hard-coding them. When storing the data in MinIO, you can use the value of the day parameter (for example, 2025-09-29) as part of the file name. Store the data in the price-data MinIO bucket. Ideally, the application should also check whether the price-data bucket exists and create it if it does not.

After the code is ready and tested, publish the Docker image to Docker Hub. The image should be named history-server.

Verify

When testing the solution manually, you can use the following requests to verify that it works. Replace the IP address in the example requests with the correct container IP, Pod IP, or localhost:

```bash
curl -X POST \
  --header "Content-Type: application/json" \
  -d '[ { "estonian_time": "2025-09-28T00:00:00+03:00", "price": 5.01, "timestamp": 1727730000 }, { "estonian_time": "2025-09-28T01:00:00+03:00", "price": 3.21, "timestamp": 1727733600 }, { "estonian_time": "2024-09-28T00:00:00+03:00", "price": 78.57, "timestamp": 1727816400 } ]' \
  "http://10.0.1.130:8080/api/prices?day=2025-09-27"
```

Then check that the data is available with a GET request:

```bash
curl "http://10.0.1.130:8080/api/prices?day=2025-09-27"
```

Warning

NB! If you get stuck with implementing the use case components, ask for help from the lecturers.

Price-fetcher

Info

You need to update the price fetcher script so that, instead of saving prices locally, it sends the data to the history data server in JSON format using an HTTP POST request.

Complete

Configure the price fetcher script to read the address of the history data server from an environment variable named HISTORY_SERVER_URI. We will set this value later in the Kubernetes CronJob manifest, once a Kubernetes Service for the history data server has been created.

Inside the script, once the data has been collected from the Elering API, prepare the JSON payload and send a POST request (for example, using the requests.post() method in Python) to the history data server endpoint HISTORY_SERVER_URI/api/prices?day={tomorrow}, where tomorrow is a date in the format 2025-09-29.

Prepare the data in the same JSON format as shown in the previous section and attach it as the body of the HTTP POST request. Hint: it is fine to replace the Elering CSV API request with a similar JSON request to avoid the CSV-to-JSON parsing step.
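Once the history-server Service exists, the variable can be set in the CronJob manifest. A minimal sketch of the relevant part, assuming the CronJob runs in the production namespace; the CronJob name, schedule, container name, and image below are placeholders, so keep the values from your existing price-fetcher CronJob and only add the env entry:

```yaml
# Sketch only: name, schedule, container name and image are placeholders;
# keep the values from your existing price-fetcher CronJob.
apiVersion: batch/v1
kind: CronJob
metadata:
  name: price-fetcher
  namespace: production
spec:
  schedule: "0 12 * * *"   # placeholder schedule
  jobTemplate:
    spec:
      template:
        spec:
          restartPolicy: OnFailure
          containers:
            - name: price-fetcher
              image: "<dockerhub-user>/price-fetcher:latest"
              env:
                # Address of the history-server Service in the production namespace
                - name: HISTORY_SERVER_URI
                  value: "http://history-server.production.svc.cluster.local:80"
```

Passing the address through the environment keeps the script unchanged between local testing and the cluster; only the manifest decides where the data goes.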

Services

Services are helpful when a single point of access for a Pod/set of Pods is needed. Let's create a simple Service for MinIO in the production namespace:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: minio
spec:
  type: ClusterIP #(1)
  selector: #(2)
    app: minio
  ports: #(3)
    - port: 9000
      targetPort: 9000
```

- spec.type determines where the app is reachable. With ClusterIP, the Service exposes the app only within the cluster;

- spec.selector makes the Service forward traffic to Pods with the given labels;

- spec.ports lists the ports available to clients (port field) and maps them to container ports (targetPort field).

The history server accesses MinIO over the internal cluster network, so it makes sense to expose MinIO only within the cluster (type ClusterIP).

You can view the Pods targeted by this Service:

```bash
kubectl get pod -l app=minio -n production
# NAME READY STATUS RESTARTS AGE
# minio-0 1/1 Running 0 5d23h
```

Info

There are different options for Service type:

- ClusterIP - Kubernetes assigns an IP address that is reachable only within the cluster;

- NodePort - every node in the cluster reserves the specified port and forwards traffic arriving on it to the Service;

- LoadBalancer - Kubernetes relies on an external load balancer (not covered in this lab);

- ExternalName - the Service is mapped to a specified DNS name (not covered in this lab).

Cluster IP

Complete

Inspect the IP address of the echoserver Service (created in the Test Kubernetes part of lab 3):

```bash
kubectl describe service/echoserver -n default
# Name: echoserver
# Namespace: default
# ...
# IP: 10.106.242.153
# IPs: 10.106.242.153
# Port: <unset> 80/TCP
# ...
```

This is a cluster-scoped IP, meaning it is reachable only from within the cluster.

Verify

You can check that this endpoint works with curl:

```bash
# replace the IP address with the IP of your Service
curl 10.106.242.153:80
# {"host":{"hostname":"...
```

The output above shows that the endpoint is accessible.

ClusterIP is practical when an app should be available only internally. Traffic from outside the cluster can still reach such a Service through an Ingress resource, which is covered in the second part of the lab.

Complete

In the production namespace:

- create a history-server Deployment for the history server app using the image you built previously;

- create a ClusterIP-type Service (name: history-server, listening port: 80) for the Deployment and validate that it works using curl;

- build the adjusted price-fetcher app, which sends price data to the history-server endpoint, and update the existing CronJob.

NB:

- Please use the following labels for the history-server Deployment: app=electricity-calculator, microservice=history-server. Make sure to add both of them to the metadata of the Deployment and to the metadata of the Pod template;

- The history-server Deployment should use the MinIO credentials from the minio-secret to set up the necessary environment variables, similarly to how they were defined for the MinIO StatefulSet in the previous lab;

- Update the price-fetcher CronJob specification and add an environment variable (env:) named HISTORY_SERVER_URI, which defines how to connect to the history server. Its value should be the Service address of the history server: http://history-server.production.svc.cluster.local:80

Hint: To reference the MinIO Service, use either minio:9000 or http://minio:9000, depending on whether the MinIO library you use requires a protocol before the hostname. You can find the explanation in the Service Discovery section.
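A minimal sketch of the history-server Deployment and Service, under several assumptions that you should adapt: the image name uses a <dockerhub-user> placeholder, the app listens on port 8080 (as in the earlier curl examples), the app reads the environment variables MINIO_URI, ACCESS_KEY, and SECRET_KEY, and minio-secret stores the credentials under the keys MINIO_ROOT_USER and MINIO_ROOT_PASSWORD. All of these names are hypothetical; use the ones from your own code and from the previous lab.

```yaml
# Sketch only: image, container port, env variable names and secret keys are assumptions.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: history-server
  namespace: production
  labels:
    app: electricity-calculator
    microservice: history-server
spec:
  replicas: 1
  selector:
    matchLabels:
      app: electricity-calculator
      microservice: history-server
  template:
    metadata:
      labels:
        app: electricity-calculator
        microservice: history-server
    spec:
      containers:
        - name: history-server
          image: "<dockerhub-user>/history-server:latest"
          ports:
            - containerPort: 8080   # assumed app port
          env:
            # MinIO is reached through its ClusterIP Service in the same namespace;
            # use http://minio:9000 instead if your library expects a protocol
            - name: MINIO_URI
              value: "minio:9000"
            - name: ACCESS_KEY
              valueFrom:
                secretKeyRef:
                  name: minio-secret
                  key: MINIO_ROOT_USER       # hypothetical key name
            - name: SECRET_KEY
              valueFrom:
                secretKeyRef:
                  name: minio-secret
                  key: MINIO_ROOT_PASSWORD   # hypothetical key name
---
apiVersion: v1
kind: Service
metadata:
  name: history-server
  namespace: production
spec:
  type: ClusterIP
  selector:
    app: electricity-calculator
    microservice: history-server
  ports:
    - port: 80          # port clients use
      targetPort: 8080  # assumed container port
```

The Service maps port 80 to the container port and selects Pods by both labels, so it does not pick up other microservices that might share app=electricity-calculator. After applying the manifest, curl the Service IP on port 80 as described above.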

Info

You can check the status code of a simple request:

```bash
curl -I <Service IP>:80
# HTTP/1.1 200 OK
# ...
```

To validate that the app microservices work together, you can trigger the new price fetching job manually (see the previous lab) and list the contents of the MinIO bucket using the MinIO client (mc) in the MinIO Pod. Example output:

```bash
mc ls default/price-data
# [2025-09-29 17:51:53 UTC] 1.1KiB STANDARD 2025-09-30.csv
```

NodePort

The second major type of Service is NodePort, which opens the selected port on every cluster node and forwards traffic arriving on it to the Service's Pods. By default, node ports must be in the range 30000-32767.

Complete

Let's create a NodePort service for the echoserver in the default namespace:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: echoserver-service-nodeport
  namespace: default
spec:
  type: NodePort
  selector:
    app: echoserver
  ports:
    - port: 80
      targetPort: 80
      nodePort: 30001
```

It works like the previous Service, selecting Pods by the app: echoserver label, but it uses a different name (echoserver-service-nodeport) for service discovery.

Describing the Service shows its network-specific details:

```bash
kubectl describe service/echoserver-service-nodeport
# ...
# IPs: 10.109.11.56
# Port: <unset> 80/TCP
# TargetPort: 80/TCP
# NodePort: <unset> 30001/TCP
# ...
```

Verify

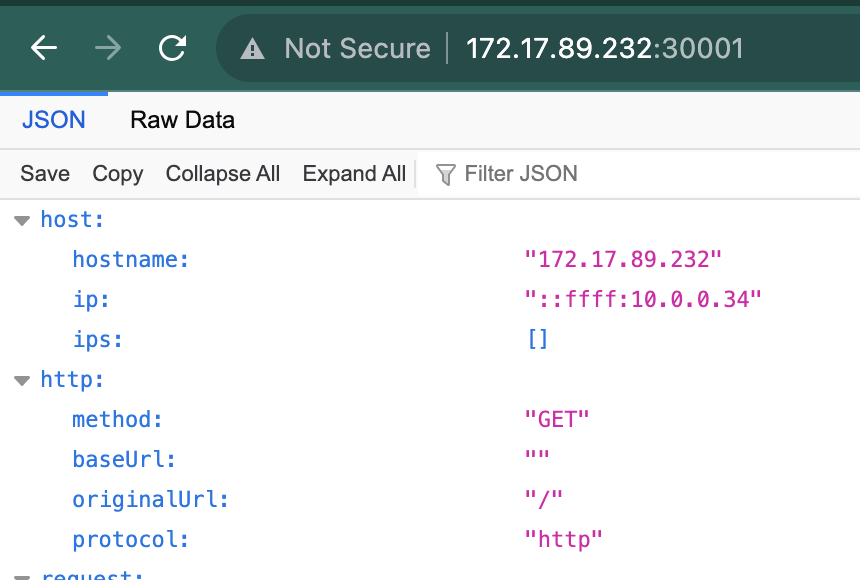

The server should be accessible via node address now:

curl 0.0.0.0:30001

# {"host":{"hostname":"0.0.0.0"...

Info

You are able to access the service from your browser using the public IP of your control plane node and the port 30001

You should see a similar page:

Headless Services

A headless Service is a Service without an assigned cluster IP. Instead of a single virtual IP, it exposes the IP of each selected Pod separately. Using such Services, Pods can discover neighbouring Pods by their IPs. For example, Pods managed by a StatefulSet can check each other's availability using the IPs exposed by a headless Service. Headless Services also let clients send writes to specific Pods and reads to others. An example is PostgreSQL leader-follower replication: all writes should go to the leader, while reads can be handled by the followers.

A headless Service does not provide load balancing. A specific Pod of a StatefulSet can be reached from the same namespace using the hostname format <pod-name>.<service-name>.

Complete

Let's create a PostgreSQL StatefulSet with this config:

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: postgresql-hl-test
  namespace: test
spec:
  selector:
    matchLabels:
      app: postgresql-hl-test
  serviceName: postgresql-hl #(1)
  replicas: 2 #(2)
  template:
    metadata:
      labels:
        app: postgresql-hl-test
    spec:
      containers:
        - name: postgres
          image: postgres:17.6
          imagePullPolicy: "Always"
          env: #(3)
            - name: POSTGRES_DB
              value: "test"
            - name: POSTGRES_USER
              value: "test"
            - name: POSTGRES_PASSWORD
              value: "postgres-password"
          volumeMounts:
            - mountPath: /var/lib/postgresql/data
              name: postgredb
          ports:
            - containerPort: 5432
              name: postgres-port
      volumes: #(4)
        - name: postgredb
          emptyDir: {}
```

- The name of the headless Service created below; used for service discovery between the created Pods

- Number of PostgreSQL replicas

- Environment variables for the PostgreSQL database setup

- For now, PostgreSQL uses an emptyDir volume instead of persistent storage

Also, let's create a headless service with the aforementioned name.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: postgresql-hl
  namespace: test
spec:
  clusterIP: None
  selector:
    app: postgresql-hl-test
  ports:
    - port: 5432
      targetPort: 5432
```

You can list the endpoints and see that the single Service name has two linked endpoints:

```bash
kubectl get endpoints -n test
# NAME ENDPOINTS AGE
# postgresql-hl 10.0.1.213:5432,10.0.2.190:5432 12m
```

The description of the postgresql-hl Service also shows two endpoints, one per PostgreSQL Pod, meaning you can access each Pod separately:

```bash
kubectl describe service/postgresql-hl -n test
# ...
# IP: None
# IPs: None
# Port: <unset> 5432/TCP
# TargetPort: 5432/TCP
# Endpoints: 10.0.1.181:5432,10.0.1.42:5432
# ...
```

To test this, connect from one of the two Pods to the other:

```bash
kubectl exec -it postgresql-hl-test-0 -n test -- /bin/bash
# ...
export PGPASSWORD='postgres-password'
psql -h postgresql-hl-test-1.postgresql-hl -U test
# psql (15.4)
# Type "help" for help.
# test=>
```

You should see a successful connection to the database.

Ingresses

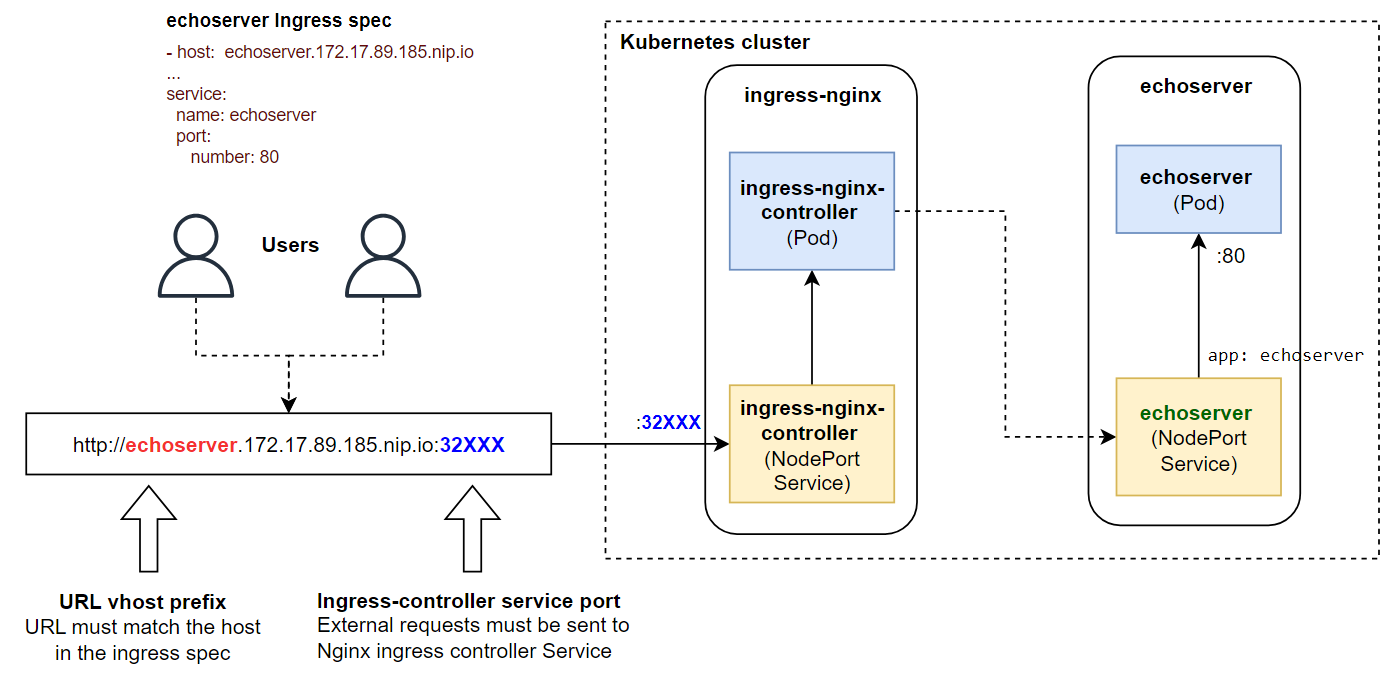

Ingress is a resource that controls external access to the services in a Kubernetes cluster.

To route the network traffic, a cluster needs an ingress controller. Kubernetes supports many of them; this lab focuses on the NGINX ingress controller (ingress-nginx).

In the bare-metal installation used below, the controller is essentially a Deployment exposed by a NodePort Service on ports 80 and 443.

Complete

First of all, let's install the controller:

```bash
kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.13.2/deploy/static/provider/baremetal/deploy.yaml
```

All the required resources (Deployment, Service, etc.) should be available in the ingress-nginx namespace.

Verify

When you list Pods in the new ingress-nginx namespace, you should see two completed Job Pods and one running controller Pod:

```bash
kubectl get pods -n ingress-nginx
# NAME READY STATUS RESTARTS AGE
# ingress-nginx-admission-create-pn564 0/1 Completed 0 2m
# ingress-nginx-admission-patch-hvz7h 0/1 Completed 0 2m
# ingress-nginx-controller-79bc9f5df8-rzdqr 1/1 Running 0 2m
```

Two Services should also exist. The most important one is ingress-nginx-controller:

```bash
kubectl get service -n ingress-nginx
# NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
# ingress-nginx-controller NodePort 10.96.155.146 <none> 80:32375/TCP,443:30650/TCP 135m
# ingress-nginx-controller-admission ClusterIP 10.96.24.140 <none> 443/TCP 135m
```

Before continuing, look up the HTTP node port of this Service (32375 in the example):

```bash
NODEPORT=$(kubectl get -n ingress-nginx service/ingress-nginx-controller -o jsonpath='{.spec.ports[0].nodePort}')
echo $NODEPORT
# 32375
```

This is the node port on which the NGINX controller listens for incoming HTTP traffic. We will use it later to validate the Ingress.

Complete

Let's create an Ingress for echoserver:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: echoserver-ingress
  labels:
    name: echoserver-ingress
spec:
  ingressClassName: "nginx"
  rules:
    - host: echoserver.PUBLIC_NODE_IP.nip.io #(1)
      http:
        paths:
          - pathType: Prefix
            path: "/" #(2)
            backend:
              service:
                name: echoserver #(3)
                port:
                  number: 80 #(4)
```

- Hostname for the service. Please replace PUBLIC_NODE_IP with the actual public IP of your control-plane node;

- Ingress allows different paths for different services; in this lab, we use only the root path;

- Service name to look up;

- Service port to target.

nip.io is a wildcard DNS service: a hostname such as echoserver.PUBLIC_NODE_IP.nip.io resolves to the IP address embedded in it (PUBLIC_NODE_IP in the example), so no DNS records need to be created.

Verify

Shortly after creating the Ingress, you can test it in a browser. Go to http://echoserver.PUBLIC_NODE_IP.nip.io:NODEPORT, where PUBLIC_NODE_IP is the external node IP used in the Ingress hostname and NODEPORT is the value discovered previously. You should see a response similar to the one in the NodePort section.

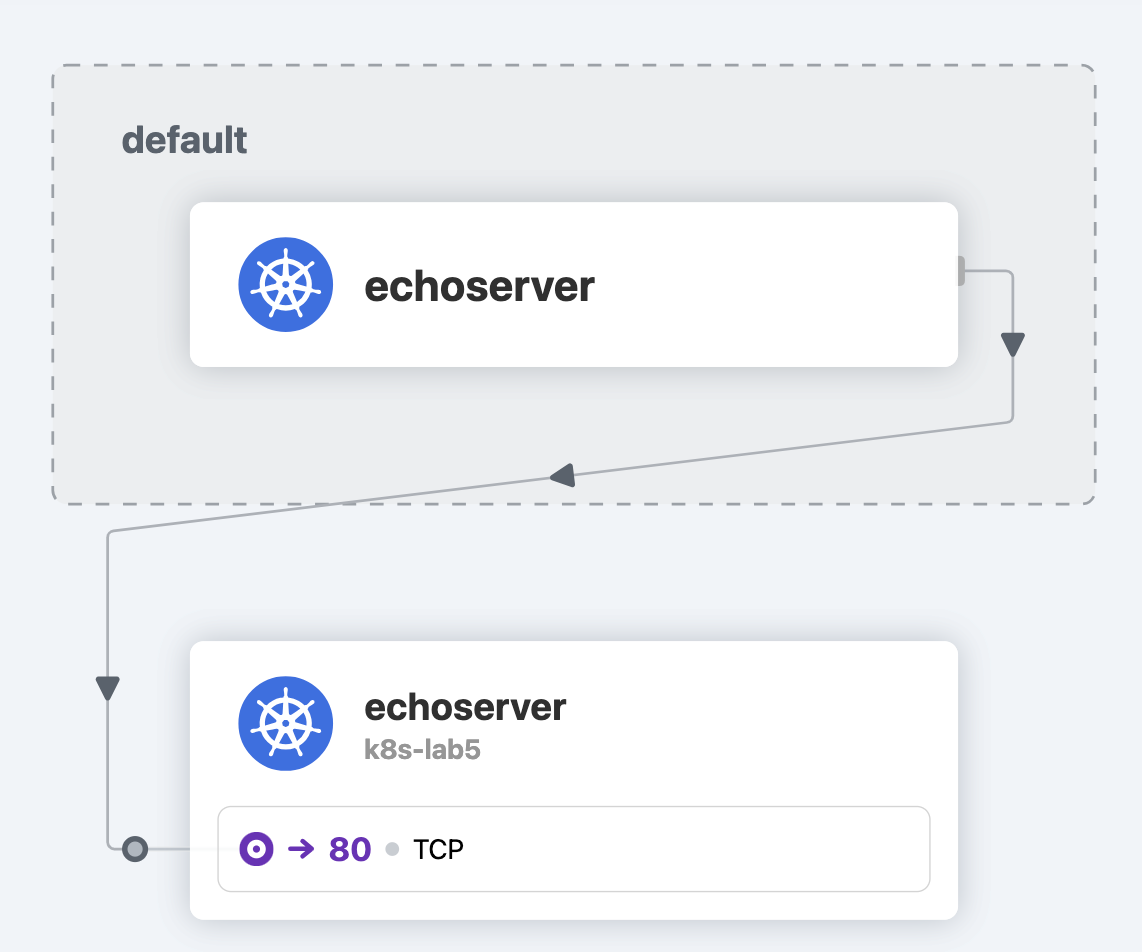

Service Discovery and Network Policies

Service Discovery in Kubernetes

In Kubernetes, a Service can be discovered in two ways:

- By its short name (metadata.name). This works only for Services in the same namespace, for example: echoserver;

- By its fully qualified domain name. This also works across namespaces and uses the address format <service-name>.<namespace>.svc.<cluster-domain>:<service-port>, for example: echoserver.default.svc.cluster.local:80.

Complete

Let's create a new namespace called k8s-lab5:

```bash
kubectl create namespace k8s-lab5
```

Then deploy an additional echoserver Pod and Service in it, with the same configuration as in lab 3 (the Service should be of type ClusterIP):

```bash
kubectl apply -f echoserver-pod.yaml -n k8s-lab5
kubectl apply -f echoserver-service.yaml -n k8s-lab5
```

Verify

When the Pod is up, open a shell in the echoserver Pod in the default namespace:

```bash
kubectl exec -it echoserver -- sh
```

and send a request to the echoserver in k8s-lab5:

```bash
wget -q -O- http://echoserver.k8s-lab5.svc.cluster.local:80/
```

In the browser, you can open the Hubble UI, select the default namespace, and view the traffic flowing from one echoserver Pod to the other. If you set up Hubble in lab 3, the UI is available at http://<CONTROL_PLANE_EXTERNAL_IP>:31000/. The external IP can be found in the ETAIS portal (Project -> Resources -> VM -> External IP).

NetworkPolicy Resource

Kubernetes uses NetworkPolicy resources to isolate Pods from unwanted network connections. A policy allows traffic between the selected Pods and:

- Pods in the same namespace, selected by labels;

- Pods in other namespaces, selected by namespace labels (optionally narrowed down by Pod labels);

- IP addresses in a given CIDR range.

Policies can apply to incoming (ingress) traffic, outgoing (egress) traffic, or both.
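The echoserver example below only uses ingress rules with namespace and Pod selectors. To illustrate the other two options mentioned above (egress rules and CIDR ranges), a hypothetical policy could look like this; the policy name, the app=example-client label, and the 192.0.2.0/24 documentation range are placeholders, and the policy is not part of the lab tasks:

```yaml
# Hypothetical example, not part of the lab tasks: name, labels and CIDR are placeholders.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: example-egress-policy
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: example-client
  policyTypes:
    - Egress
  egress:
    # Allow outgoing HTTPS only to addresses in the given CIDR range
    - to:
        - ipBlock:
            cidr: 192.0.2.0/24
      ports:
        - protocol: TCP
          port: 443
    # Keep DNS working: once egress is restricted, lookups are blocked too
    - to:
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: kube-system
      ports:
        - protocol: UDP
          port: 53
        - protocol: TCP
          port: 53
```

Once a Pod is selected by a policy with the Egress type, all outgoing traffic that no rule allows is dropped, including DNS lookups, which is why the sketch also allows port 53 towards the kube-system namespace.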

Complete

An example policy that allows only specific clients to access the echoserver Pods in the default namespace:

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: echoserver-network-policy
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: echoserver
  policyTypes:
    - Ingress
  ingress:
    - from:
        - namespaceSelector:
            matchLabels: #(1)
              kubernetes.io/metadata.name: k8s-lab5
        - podSelector:
            matchLabels: #(2)
              app: nginx
      ports:
        - protocol: TCP
          port: 80
```

- All Pods from the k8s-lab5 namespace can access the Pod;

- All Pods from the same namespace with the label app=nginx can access the Pod.

Create the network policy from the manifest above in the default namespace.

NB: This policy could break the lab3-05 check on the scoring site. If this happens, follow the cleanup instructions after completing the main content of this lab.

Verify

Try to access the http://echoserver.default.svc.cluster.local:80/ endpoint from the echoserver Pod in k8s-lab5:

```bash
kubectl exec -it -n k8s-lab5 echoserver -- sh
wget -q -O- http://echoserver.default.svc.cluster.local:80/
```

The request should succeed.

Create an NGINX Deployment in the default namespace, similar to the one from the previous lab. Ensure the labels are correct:

```bash
kubectl get pods -l app=nginx -n default
# NAME READY STATUS RESTARTS AGE
# nginx-64b87bb78-gdrnl 1/1 Running 0 5d
```

Now, let's see what happens when a request is sent to the endpoint from an NGINX Pod:

```bash
kubectl exec -it deployment/nginx -n default -- bash
curl -v http://echoserver:80/
```

This request should succeed as well.

The final check is to access the echoserver from an NGINX Pod in the test namespace:

```bash
kubectl exec -it deployment/nginx -n test -- bash
curl -v http://echoserver.default.svc.cluster.local:80/ --connect-timeout 5
# curl: (28) Failed to connect to echoserver.default.svc.cluster.local port 80 after 5001 ms: Timeout was reached
```

The NetworkPolicy blocks this connection because traffic from the test namespace is not allowed by any of its rules.

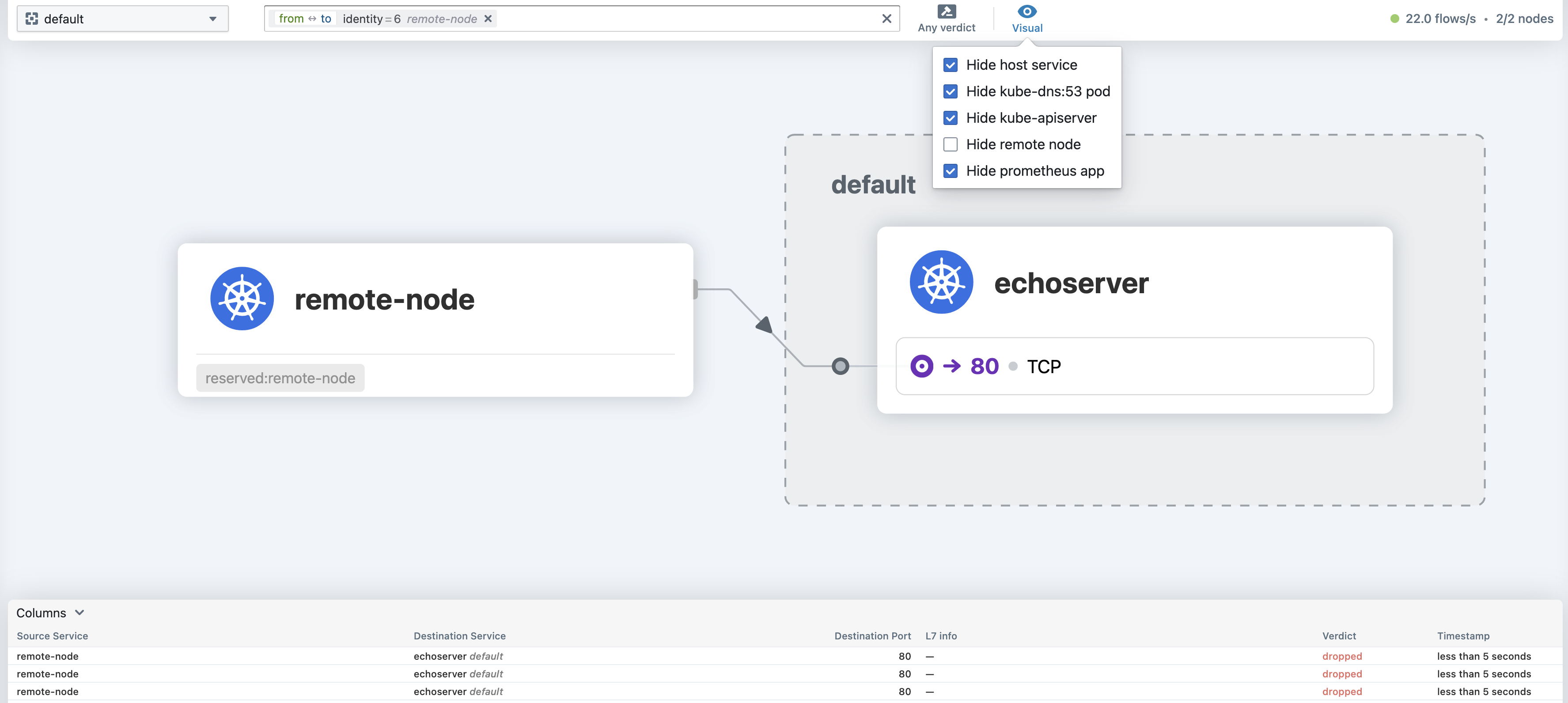

You can also view the graph with dropped connections in the hubble UI. The result should look like this:

Complete

You need to create a network policy for the MinIO Pods. The policy should isolate the MinIO Pods from all connections except those coming from the history-server Pods in the same production namespace.

NB: please name the policy minio-network-policy.
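One possible shape of this policy, as a sketch: it assumes the MinIO Pods carry the app=minio label (the one the minio Service selects) and the history-server Pods carry the microservice=history-server label required earlier. A podSelector without a namespaceSelector only matches Pods in the policy's own namespace, so connections from other namespaces are not allowed.

```yaml
# Sketch only: assumes the labels app=minio (MinIO Pods) and
# microservice=history-server (history-server Pods) used earlier in this lab.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: minio-network-policy
  namespace: production
spec:
  # Pods this policy protects
  podSelector:
    matchLabels:
      app: minio
  policyTypes:
    - Ingress
  ingress:
    - from:
        # Only history-server Pods from the same (production) namespace
        - podSelector:
            matchLabels:
              microservice: history-server
```

Because no ports are listed, the history server may reach MinIO on any port; adding a ports entry for TCP 9000 would narrow this further. After applying it, re-run the price fetching job and check that the data still reaches the price-data bucket.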

Cleanup

You can safely remove the following resources as they are for testing purposes only and don't affect the lab scoring:

- Service echoserver-service-nodeport in the default namespace;

- StatefulSet postgresql-hl-test and Service postgresql-hl in the test namespace;

- NetworkPolicy echoserver-network-policy in the default namespace;

- Deployment nginx in the default namespace;

- Namespace k8s-lab5.